An Electronic Document Filing Disaster

Did you ever create folders on your computer to help you organize your documents? Did it seem like a good idea at first, but later you realized your folders did not fully categorize your ideas, so you added some more folders inside your existing folders? Did you notice that you repeated this process a few times and after a while you had so many folders that you stopped putting documents in the correct folder when you were busy, because it was too much trouble?

If any of this sounds like you, do not worry; you are not alone. Many individuals and companies have gone through this cycle, often many times. For some, the end is to purchase a document management system. These systems offer the promise of better organization for documents in addition to security, audit controls, and much more.

With a shiny new document management system installed, many library administrators proceed to set up their ideal library structure. They have had the benefit of creating and recreating the structure many times on their old shared-folder systems, so they are experts. Of course, their structure is an elaborate and accurate taxonomy of their document management requirements.

Soon they discover users are misfiling documents or not putting them in the document management system at all. Others are complaining about how long it takes to file a document. Upon investigation it turns out that some users are unsure how the complex taxonomy applies to their document, or find that their document belongs in two or more places at once. In any case, it is enough trouble that they are more likely to save it on their local drive “temporarily” and get around to putting it in the document management system when they have more time.

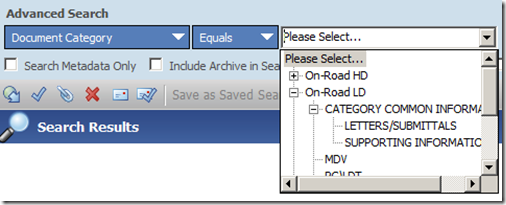

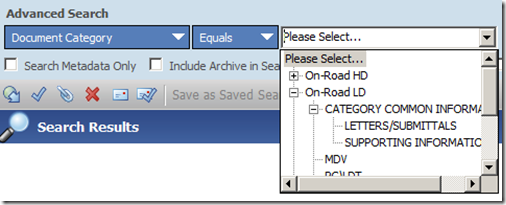

In the same environment, when someone wants to find a document, they are faced with digging through the structure to get what they want. The only other option they have is to perform an unstructured search and hope the document contents will reveal its location. A user wanting to find all timesheets from last week in my example screenshot above would need to drill into each project down to its timesheet folder and then look at the document names or modified dates to hopefully find the timesheets with last week’s entries.

Of course, the obvious solution to finding the timesheets is to add a ninth level of hierarchy for each week and ask users to put their timesheet into the correct folder. I think you get the point.

How did we come to think this way? Do not blame yourself. Organizing files into a hierarchy started with Multics in the late 1960s and Unix in the early 1970s, and it broadened dramatically with MS-DOS 2.0 in the early 1980s and nearly every operating system since. We all have many, many years of conditioning.

Easy Document Filing

You can avoid the look of befuddlement with a small change to your thinking. First, extract the metadata from your folder hierarchy. It is not as tough as it might seem at first and there is a bit of guidance here. You can organize this metadata according to the types of documents you are storing; some will need more and some less. You may decide that some metadata should be mandatory and some optional.
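If each level of your existing folders means something consistent, much of the extraction can be mechanical: every folder above a file becomes a named metadata value. The sketch below is a minimal Python illustration, assuming a hypothetical hierarchy whose levels are department, client, project and document type; the level names and the `metadata_from_path` function are made up for this example and are not part of any document management product.

```python
from pathlib import PurePosixPath

# Hypothetical folder levels; substitute the levels of your own hierarchy.
FOLDER_LEVELS = ["department", "client", "project", "document_type"]


def metadata_from_path(path: str) -> dict[str, str]:
    """Turn each folder level above the file into a named metadata field."""
    p = PurePosixPath(path)
    folders = p.parent.parts  # the folder names, top level first
    # zip() stops at the shorter sequence, so shallow paths simply yield fewer fields.
    fields = dict(zip(FOLDER_LEVELS, folders))
    fields["file_name"] = p.name
    return fields


print(metadata_from_path("Engineering/Acme/Bridge/Timesheet/jsmith-2024-w12.xlsx"))
```

Running a script like this over an old shared drive gives a first draft of the fields each document type needs, which you can then trim or mark as mandatory or optional.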

Your document management system will almost certainly give you a place to configure this metadata; after all, that is what they are supposed to be good at. Some document management systems will directly use the term metadata. Others will substitute indexes, properties, tags, labels, and possibly others to mean the same thing. The point is that this is information that describes the document without the need to modify the contents of the document.

Folders are very good for setting access control for a variety of documents. Create as many as you need, but no more. You do not want users to need to think too hard about putting documents away. By restricting permissions for users to only areas related to their job, they may only need to look through a very small list of folders; perhaps only one!
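To picture how short that list can become, here is a small sketch assuming a hypothetical mapping of top-level folders to user groups. The folder names, group names and the `visible_folders` function are illustrative only; a real document management system keeps these permissions in its own security settings rather than in code.

```python
# Hypothetical folder-to-group permissions, used here only for illustration.
FOLDER_PERMISSIONS: dict[str, set[str]] = {
    "Accounting": {"accounting"},
    "Engineering": {"engineers", "project_managers"},
    "HR": {"hr"},
}


def visible_folders(user_groups: set[str]) -> list[str]:
    """Return only the folders a user may see, so there is little to choose from."""
    return sorted(
        folder
        for folder, groups in FOLDER_PERMISSIONS.items()
        if groups & user_groups  # any shared group grants access
    )


print(visible_folders({"accounting"}))  # ['Accounting']
```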

With a metadata-based approach, when a user stores a document they will be asked to identify the document type. Some document management systems may call this the document schema or document class. They will also be asked to add values for the metadata that has been specified for the document type. Rather than navigating a tree of folders to find the right place to store the document, they will see one piece of information at a time, and the specific location where the document is stored will be less important. Where a set of metadata values can be predetermined, the user may be able to select from a list to help ensure accuracy. Depending on the source of the document and the capability of the document management system, it may be possible to automatically extract metadata directly from the document.
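One way to think about the question-and-answer style is as a document type that lists its fields, marks some as mandatory and gives some a fixed pick-list. The following Python sketch assumes a hypothetical “Timesheet” type; the `MetadataField` and `DocumentType` classes are invented for illustration, and each product has its own way of configuring the same idea.

```python
from dataclasses import dataclass, field


@dataclass
class MetadataField:
    name: str
    required: bool = False
    choices: tuple[str, ...] = ()  # an empty tuple means free text


@dataclass
class DocumentType:
    name: str
    fields: list[MetadataField] = field(default_factory=list)

    def validate(self, values: dict[str, str]) -> list[str]:
        """Return the problems found; an empty list means the metadata is acceptable."""
        problems: list[str] = []
        for f in self.fields:
            value = values.get(f.name, "").strip()
            if not value:
                if f.required:
                    problems.append(f"{f.name} is required")
                continue  # optional fields may be left blank
            if f.choices and value not in f.choices:
                problems.append(f"{f.name} must be one of: {', '.join(f.choices)}")
        return problems


# Hypothetical document type: project comes from a pick-list, notes are optional.
TIMESHEET = DocumentType(
    "Timesheet",
    [
        MetadataField("project", required=True, choices=("Bridge", "Tunnel", "Tower")),
        MetadataField("employee", required=True),
        MetadataField("week_ending", required=True),
        MetadataField("notes"),
    ],
)

print(TIMESHEET.validate({"project": "Dam", "employee": "jsmith"}))
```

Because the user answers one field at a time, a missing or mistyped value is caught at filing time instead of surfacing later as a document nobody can find.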

I often hear library administrators complain that they cannot rely on users to fill out metadata. Unfortunately, these are the very same users who will not search the folder hierarchy to store the documents in the correct place either. The advantage of the metadata approach is the question-and-answer style of collecting the metadata. In the worst case, if users are in too much of a hurry to enter all the metadata, at least they would be able to easily put the document in the document management system rather than on their local drive.

An added benefit of adding metadata to a document is that the information lives with the document regardless of where the document is located. When a folder structure is used to represent meta information about a document the relationship is indirect. Moving a document from one part of the library to another will cause the original meta information to be lost and new meta information to be added regardless of whether or not that is what was intended.
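The difference between direct and indirect meta information is easy to see in a toy model. In this sketch, which assumes a hypothetical `Document` class rather than any product's API, the folder path stands in for the indirect meta information and the `metadata` dictionary stands in for metadata stored with the document.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath


@dataclass
class Document:
    path: str
    # Metadata stored with the document itself (the direct relationship).
    metadata: dict[str, str] = field(default_factory=dict)

    def implied_by_folders(self) -> tuple[str, ...]:
        """Meta information implied only by location (the indirect relationship)."""
        return PurePosixPath(self.path).parent.parts

    def move(self, new_folder: str) -> None:
        """Change the location; the metadata dictionary is left untouched."""
        name = PurePosixPath(self.path).name
        self.path = str(PurePosixPath(new_folder) / name)


doc = Document("Projects/Bridge/Timesheets/jsmith.xlsx",
               {"project": "Bridge", "document_type": "Timesheet"})
doc.move("Archive/2024")
print(doc.implied_by_folders())  # ('Archive', '2024'): project and type are gone
print(doc.metadata)              # still {'project': 'Bridge', 'document_type': 'Timesheet'}
```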

Locating Documents Easily

Library administrators will often cite the ease of locating documents as the reason for creating the deeply structured folder hierarchy. The reality is far from that. When was the last time you browsed through a tree of options to find something on the web? You almost certainly have a few personal bookmarks, but you probably find most things using a search engine like Google. This sort of searching capability is the hallmark of many document management systems.

Most document management systems have a method for individuals to bookmark documents that are important to them. Other names for a bookmarking-like feature include shortcuts, favorites, and virtual folders. In this case it is not up to the library administrator to predetermine what you should care about and how you should find it. The individual user gets a chance to customize their environment according to how they work.

When it comes to searching, I know many of you are thinking that Google does not always find everything you are looking for. You do not want to lose your critical documents when you cannot think of just the right search term. It is true that document management systems can often use the text contents of documents to perform a full-text search, but even better results are possible when the search is looking for specific metadata. In fact, you can expect that with metadata searching your chances of finding a document will never be worse than with hierarchical folders and will generally be much better.

I use Gmail extensively and it has a very good search capability, but I can enhance it. Gmail allows me to add one or more labels (metadata) to my email messages, and it allows me to qualify my search by specifying the labels in addition to my text. This alone is a huge improvement in helping me find my messages, and it is an enormous leap over sorting my emails into individual folders in Microsoft Outlook.

Back in the document world, users have typical ways they need to find their documents on a day-to-day basis. These need to fit with the operational processes that they support in their jobs. They tend to remain fairly constant. In my previous example an accounting clerk was looking for last week’s timesheets for all projects. They do this search every Monday to update their project accounting, so they do not want to worry about forming a special search each week. Document management systems provide a solution here as well.

Many kinds of software that store files provide a way to save a search and use it over and over again. It is a way of looking at your documents as though they were in a folder, but the folder is virtual and dynamic rather than hard-wired into a hierarchy. One of the first examples of this was the saved search in BeOS. Apple Mac OS X borrowed the idea as “Smart Folders”, and Microsoft Outlook now has “Search Folders”. Adobe Lightroom has “Smart Collections”, M-Files has “Dynamic Views”, and FileHold has “Saved Searches”, to name a few.
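Conceptually, a saved search stores the criteria rather than the results, so the virtual folder is refilled every time it is opened. Here is a minimal Python sketch of the accounting clerk's Monday search, assuming hypothetical `doc_type` and `week_ending` metadata fields and a Monday-to-Sunday week; it does not reproduce how any of the products above implement the feature.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, Optional


@dataclass
class Doc:
    name: str
    doc_type: str
    project: str
    week_ending: date


# A saved search is stored criteria, evaluated against "today" each time it runs.
SavedSearch = Callable[[Doc, date], bool]


def last_week(today: date) -> tuple[date, date]:
    """Monday and Sunday of the calendar week before the week containing `today`."""
    this_monday = today - timedelta(days=today.weekday())
    last_monday = this_monday - timedelta(days=7)
    return last_monday, last_monday + timedelta(days=6)


def last_weeks_timesheets(doc: Doc, today: date) -> bool:
    start, end = last_week(today)
    # No project filter: the clerk wants every project's timesheets.
    return doc.doc_type == "Timesheet" and start <= doc.week_ending <= end


def open_saved_search(docs: list[Doc], search: SavedSearch,
                      today: Optional[date] = None) -> list[Doc]:
    """Fill the virtual folder on demand; nothing is copied or moved."""
    today = today or date.today()
    return [d for d in docs if search(d, today)]


library = [
    Doc("bridge-jsmith.xlsx", "Timesheet", "Bridge", date(2024, 3, 22)),
    Doc("tunnel-alee.xlsx", "Timesheet", "Tunnel", date(2024, 3, 22)),
    Doc("bridge-jsmith-old.xlsx", "Timesheet", "Bridge", date(2024, 3, 15)),
    Doc("invoice-117.pdf", "Invoice", "Bridge", date(2024, 3, 22)),
]
results = open_saved_search(library, last_weeks_timesheets, today=date(2024, 3, 25))
print([d.name for d in results])  # ['bridge-jsmith.xlsx', 'tunnel-alee.xlsx']
```

Because the date range is computed when the search is opened, the same saved search keeps working every Monday without anyone editing it.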

A common complaint about using metadata instead of a folder hierarchy is that simple metadata fields cannot convey a complete taxonomy that is best reflected in a tree. Document management systems can often solve this problem by simply providing a tree-structured metadata list, such as the drill-down fields in FileHold.
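A tree-structured metadata list can be pictured as a nested pick-list where the user selects a path, and a search on a higher level also finds documents tagged further down. The sketch assumes a hypothetical two-level department list; `TREE`, `valid_paths` and `matches` are names made up for this example and do not describe FileHold's implementation.

```python
# Hypothetical drill-down list: each value maps to its child values.
TREE: dict = {
    "Engineering": {"Civil": {}, "Electrical": {}},
    "Finance": {"Accounts Payable": {}, "Payroll": {}},
}


def valid_paths(tree: dict, prefix: tuple[str, ...] = ()) -> list[tuple[str, ...]]:
    """Every selectable value, as a path from the top of the tree."""
    paths: list[tuple[str, ...]] = []
    for name, children in tree.items():
        path = prefix + (name,)
        paths.append(path)
        paths.extend(valid_paths(children, path))
    return paths


def matches(tagged: tuple[str, ...], query: tuple[str, ...]) -> bool:
    """A document tagged lower in the tree also matches a search on any ancestor."""
    return tagged[: len(query)] == query


print(matches(("Engineering", "Civil"), ("Engineering",)))  # True
print(matches(("Finance", "Payroll"), ("Engineering",)))    # False
```

The tree lives in one metadata field, so the document still has a single home while keeping the full taxonomy available for searching.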

In Conclusion

- A document management system will help you store and organize your documents.

- Computer users have years and years of hierarchical folder training in their heads that will get in the way of implementing a better approach to storing documents. Help them get over it.

- Use folders to control access, not to represent meta information.

- Find a document management system that gives you the capability to create folders that are virtual and dynamic based on search criteria against metadata.